[Ars Technica] US gov't taps The Machine to beat China to exascale supercomputing [US media]

[Date] 2017.06.16

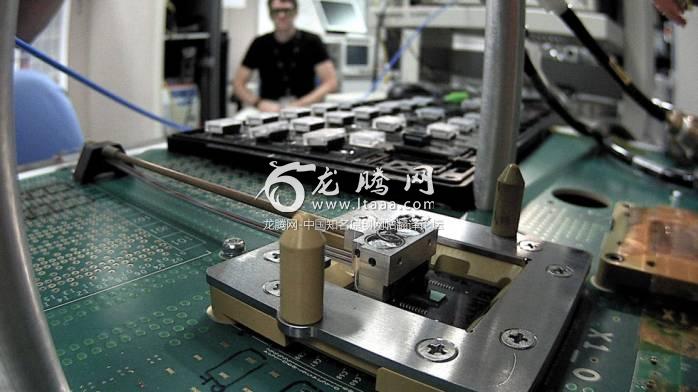

This image shows a 40-node version of The Machine (aka HPE's Memory-Driven Computing initiative). These appear to be fibre-optic cables connected to some kind of chip, but it's hard to divine much more than that.

With China threatening to build the world's first exascale supercomputer before the US, the US Department of Energy has awarded a research grant to Hewlett Packard Enterprise to develop an exascale supercomputer reference design based on technology gleaned from The Machine, a project that aims to "reinvent the fundamental architecture of computing."

The DoE historically operated most of the world's top supercomputers, but in recent years China has taken over in dramatic fashion. China's top supercomputer, Sunway TaihuLight, currently has five times the peak performance (93 petaflops) of Oak Ridge's Titan (18 petaflops). The US has gesticulated grandiosely about retaking the supercomputing crown with an exascale (1,000 petaflops, 1 exaflops) supercomputer that would be operational by 2021ish, but China is seemingly forging ahead at a much faster clip: in January, China's national supercomputer centre said it would have a prototype exascale computer built by the end of 2017 and operational by 2020.
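
The performance gap quoted above is simple to sanity-check. The Python sketch below uses only the peak figures given in this article (93 and 18 petaflops) and the definition 1 exaflops = 1,000 petaflops; the function names are illustrative, not taken from any library.

```python
# Peak performance figures quoted in the article, in petaflops.
TAIHULIGHT_PFLOPS = 93
TITAN_PFLOPS = 18
EXAFLOPS_IN_PFLOPS = 1_000  # 1 exaflops = 1,000 petaflops = 10**18 FLOPS


def ratio(a: float, b: float) -> float:
    """How many times faster machine a is than machine b."""
    return a / b


def shortfall(pflops: float) -> float:
    """Factor by which a machine must speed up to reach 1 exaflops."""
    return EXAFLOPS_IN_PFLOPS / pflops


if __name__ == "__main__":
    print(f"TaihuLight vs Titan: {ratio(TAIHULIGHT_PFLOPS, TITAN_PFLOPS):.1f}x")
    print(f"TaihuLight to exascale: {shortfall(TAIHULIGHT_PFLOPS):.1f}x")
    print(f"Titan to exascale: {shortfall(TITAN_PFLOPS):.1f}x")
```

Run as a script, it reports a ratio of about 5.2, consistent with "five times", and shows that even TaihuLight would need roughly a tenfold speed-up (about 10.8x) to reach 1 exaflops, while Titan would need about 55.6x.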

To create an effective exascale supercomputer from scratch, you must first ~~invent the universe~~ solve three problems: the inordinate power usage (gigawatts) and cooling requirements; developing the architecture and interconnects to efficiently weave together hundreds of thousands of processors and memory chips; and devising an operating system and client software that actually scales to one quintillion calculations per second.

You can still physically build an exascale supercomputer without solving all three problems—just strap together a bunch of CPUs until you hit the magic number—but it won't perform a billion-billion calculations per second, or it'll be untenably expensive to operate. That seems to be China's approach: plunk down most of the hardware in 2017, and then spend the next few years trying to make it work.

The DoE, on the other hand, is wending its way down a more sedate path by funding HPE (and other supercomputer makers) to develop an exascale reference design. The funding is coming from a DoE programme called PathForward, which is part of its larger Exascale Computing Project (ECP). The ECP, which was set up under the Obama administration, has already awarded tens of millions of dollars to various exascale research efforts around the US. It isn't clear how much funding has been received by HPE.

Exascale computing in the USA

So, what's HPE's plan? And is there any hope that HPE can pass through three rounds of the DoE funding programme and build an exascale supercomputer before China?

HPE is proposing to build a supercomputer based on an architecture it calls Memory-Driven Computing, which is derived from parts of The Machine. Basically, HPE has developed a number of technologies that allow for a massive amount of addressable memory—apparently up to 4,096 yottabytes, or roughly the same number of atoms in the universe—to be pooled together by a high-speed, low-power optical interconnect that's driven by a new silicon photonics chip. For now this memory is volatile, but eventually—if HP ever commercialises its memristor tech or embraces Intel's 3D XPoint—it'll be persistent.

This is apparently one of HPE's X1 silicon photonics interconnect chips (in the middle of the metal clamp thing).

In addition, and perhaps most importantly, HPE says it has developed software tools that can actually use this huge pool of memory, to derive intelligence or scientific insight from huge data sets—every post on Facebook; the entirety of the Web; the health data of every human on Earth; that kind of thing. Check out this quote from CTO Mark Potter, who apparently thinks HPE's tech can save humankind: “We believe Memory-Driven Computing is the solution to move the technology industry forward in a way that can enable advancements across all aspects of society. The architecture we have unveiled can be applied to every computing category—from intelligent edge devices to supercomputers."

In practice I think we're some way from realising Potter's dream, but HPE's tech is certainly a good first step towards exascale. If we compare HPE's efforts to the three main issues I outlined above, you'd probably award a score of about 1.5: they've made inroads on software, power consumption, and scaling, but there's a long way to go, especially when it comes to computational grunt.

After the US government banned the export of Intel, Nvidia, and AMD chips to China, China's national chip design centre created a 256-core RISC chip specifically for supercomputing. All that HPE can offer is the Gen-Z protocol for chip-to-chip communications, and hope that a logic chip maker steps forward. Still, this is just the first stage of funding, where HPE is expected to research and develop core technologies that will help the US reach exascale; only if it gets to phase two and three will HPE have to design and then build an exascale machine.

Assuming all of the other parts fall into place, Intel's latest 72-core/288-thread Xeon Phi might just be enough for the US to get there before China—but with an RRP of $6,400, and roughly 300,000 chips required to hit 1 exaflops, it won't be cheap.
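
To put that price in perspective, here is a back-of-the-envelope sketch using the article's two numbers ($6,400 per chip, about 300,000 chips). It assumes perfect scaling and counts only the processors' list price, ignoring memory, interconnect, cooling and power; the helper names are hypothetical.

```python
# Figures from the article: recommended retail price per Xeon Phi chip
# and the approximate chip count needed for 1 exaflops.
RRP_USD = 6_400
CHIPS_NEEDED = 300_000
EXAFLOPS = 10**18  # floating-point operations per second


def chip_cost_usd(chips: int, unit_price: int) -> int:
    """Total list price of the processors alone (no memory, network, power)."""
    return chips * unit_price


def implied_flops_per_chip(target_flops: int, chips: int) -> float:
    """FLOPS each chip must contribute, assuming perfect scaling."""
    return target_flops / chips


if __name__ == "__main__":
    total = chip_cost_usd(CHIPS_NEEDED, RRP_USD)
    per_chip = implied_flops_per_chip(EXAFLOPS, CHIPS_NEEDED)
    print(f"Processors alone: ${total / 1e9:.2f} billion")
    print(f"Implied throughput per chip: {per_chip / 1e12:.2f} teraflops")
```

On these assumptions the processors alone come to about $1.92 billion, and each chip would have to contribute roughly 3.33 teraflops.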

Most of the DoE's exascale funding has so far been on software. Just before this story published, we learnt that the DoE is also announcing funding for AMD, Cray, IBM, Intel, and Nvidia under the same PathForward programme. In total, the DoE is handing out $258 million over three years, with the funding recipients also committing to spend at least $172 million of their own funds over the same period. What we don't yet know is what those companies are doing with that funding; hopefully we'll find out more soon.

So this might sound a little crazy, but if you're going to require gigawatts of power, does it make sense to design a power plant at the same time? You have a radioactive-looking supercomputer; you may as well put up an actual radioactive power plant next door to make sure you can run it.

I think the current plan is to keep it at around 20-50 megawatts, to avoid the whole building-your-own-power-station thing.

That's one of the big advantages China has right now - the #1 supercomputer only draws 15MW, while Titan is five times slower but draws only half the energy. Moving to Xeon Phi should help with much of that difference though, I think.
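
The efficiency gap described in this comment can be expressed in GFLOPS per watt. A rough sketch, assuming the figures stated in these comments: 93 petaflops at 15 MW for TaihuLight, 18 petaflops at half that power (7.5 MW) for Titan, and the 20-50 MW budget mentioned above for an exascale machine. These are the commenters' numbers, not measured values.

```python
# Power-efficiency arithmetic using the numbers in the comments above.
# Titan's draw is taken as half of TaihuLight's 15 MW, as the comment says;
# treat it as a rough figure, not a measured value.
PFLOPS = 10**15
MEGAWATT = 10**6


def gflops_per_watt(pflops: float, megawatts: float) -> float:
    """Energy efficiency in GFLOPS per watt."""
    return (pflops * PFLOPS) / (megawatts * MEGAWATT) / 1e9


machines = {
    "TaihuLight": (93, 15.0),
    "Titan": (18, 7.5),
}

if __name__ == "__main__":
    for name, (pf, mw) in machines.items():
        print(f"{name}: {gflops_per_watt(pf, mw):.1f} GFLOPS/W")
    # Efficiency a 1,000-petaflop machine needs within the 20-50 MW plan.
    for budget_mw in (20, 50):
        needed = gflops_per_watt(1_000, budget_mw)
        print(f"1 exaflops at {budget_mw} MW: {needed:.0f} GFLOPS/W")
```

On these assumptions TaihuLight manages about 6.2 GFLOPS/W against Titan's 2.4, while 1 exaflops within 20 MW would need 50 GFLOPS/W, roughly eight times TaihuLight's efficiency (20 GFLOPS/W at the 50 MW end).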

Can someone explain this to me?

Quote:

Basically, HPE has developed a number of technologies that allow for a massive amount of addressable memory—apparently up to 4,096 yottabytes, or roughly the same number of atoms in the universe—to be pooled together by a high-speed, low-power optical interconnect that's driven by a new silicon photonics chip.

How can you have that much memory physically available? Are they writing to sub-atomic particles?

Addressable memory. Physical memory will of course be much smaller. A typical CPU these days can address a far bigger range than the actual RAM in the machine (e.g. the 48-bit address space on x86_64 can handle 256 terabytes, but PCs only have gigabytes of physical memory). Having addressable > physical gives flexibility / advantages, though I wonder what really calls for an insane 4096-yottabyte range here.
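
The 256-terabyte figure is just 2^48 bytes expressed in binary units (tebibytes of 2^40 bytes each), 48 bits being the virtual address width the comment cites for x86_64. A minimal Python check; the function name is illustrative:

```python
def address_space_bytes(bits: int) -> int:
    """Number of distinct byte addresses an n-bit address can name."""
    return 2 ** bits


TIB = 2 ** 40  # tebibyte: the binary "terabyte" used in the comment

if __name__ == "__main__":
    space = address_space_bytes(48)
    print(space)         # 281474976710656
    print(space // TIB)  # 256
```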

So they are just saying they have a 92-bit address space?
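
A quick Python check suggests the 92-bit figure holds whichever meaning of "yottabyte" is intended: the SI 10^24 bytes or the binary yobibyte of 2^80 bytes. The helper name is illustrative.

```python
YOTTA = 10**24  # SI prefix
YOBI = 2**80    # binary prefix (yobibyte)


def bits_needed(n_bytes: int) -> int:
    """Smallest address width (in bits) that can name n_bytes distinct bytes."""
    if n_bytes < 1:
        raise ValueError("need at least one byte")
    # Addresses run from 0 to n_bytes - 1, so count the bits of the top address.
    return (n_bytes - 1).bit_length()


if __name__ == "__main__":
    print(bits_needed(4096 * YOBI))   # 92: 4096 * 2**80 == 2**92 exactly
    print(bits_needed(4096 * YOTTA))  # 92: 4.096e27 lies between 2**91 and 2**92
```

With the binary prefix the match is exact, since 4096 × 2^80 = 2^12 × 2^80 = 2^92; with the decimal prefix, 92 bits is the smallest width that fits.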

Quote:

4,096 yottabytes, or roughly the same number of atoms in the universe

The Earth has a mass of ~6,000 yottagrams, so this can't be close to true.

Ahh, I was starting to agree and type "Also, 1 gram of hydrogen has 6.02x10^23 atoms", but the 'bytes' part is important: he did not say 4096 x 10^24. One yottabyte can store any one of 2^(8x10^24) distinct values (just as 3 bytes can store 16,777,216 combinations).

Yeah not sure where the author got that from. The universe has ~10^80 atoms. Yotta is 10^24. Even our 'tiny' solar system has ~10^57 atoms.
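
The size of the discrepancy is easy to quantify. The sketch below takes the decimal reading of 4,096 yottabytes (about 4.1 × 10^27 bytes) and the order-of-magnitude atom counts from this comment (~10^80 for the universe, ~10^57 for the solar system); these are rough, commonly cited estimates, not precise values, and the function name is illustrative.

```python
import math

CLAIMED_BYTES = 4096 * 10**24   # "4,096 yottabytes", decimal reading
ATOMS_IN_UNIVERSE = 10**80      # order-of-magnitude figure from the comment
ATOMS_IN_SOLAR_SYSTEM = 10**57  # likewise


def orders_of_magnitude(big: int, small: int) -> float:
    """log10(big / small): how many powers of ten separate the two."""
    return math.log10(big) - math.log10(small)


if __name__ == "__main__":
    print(f"Claimed memory: {CLAIMED_BYTES:.3e} bytes")
    gap_universe = orders_of_magnitude(ATOMS_IN_UNIVERSE, CLAIMED_BYTES)
    gap_solar = orders_of_magnitude(ATOMS_IN_SOLAR_SYSTEM, CLAIMED_BYTES)
    print(f"Universe: {gap_universe:.1f} orders of magnitude more atoms")
    print(f"Solar system: {gap_solar:.1f} orders of magnitude more atoms")
```

On these figures the claimed memory falls about 52 orders of magnitude short of the universe's atom count, and about 29 short of the solar system's.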

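Copyright notice

We are committed to conveying the most authentic, direct and detailed views of ordinary people around the world about China.

[Copyright and disclaimer] If you find a copyright problem with any content, please email us the relevant information and we will respond and deal with it promptly. Unless the source is marked as 五毛网, content on this site is reposted by users; the opinions expressed and any copyright issues are not the responsibility of this site.

This article represents only the author's views and not the position of this site.

This article comes from the internet; in case of infringement, please contact this site promptly.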
